So this is the big question:

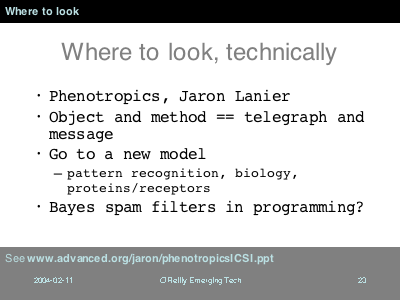

Where do we look for advice about how to cope with these trends? There's a technical perspective and a social perspective.

For the technical stuff I'd recommend Jaron Lanier on phenotropics. Briefly (and I'm abusing his ideas by shortening them this much), he describes the current world of computing like this:

- an object receiving a method call

- like a telegraph, with a message being sent over it

The problem is that if a message doesn't conform to the expected template at the other end, the result is complete failure. We don't learn from it. Instead, says Lanier, look at the models of pattern recognition and how proteins communicate.

By the shape of their surface, proteins disclose their entire structure. The crude features say roughly what they do; the finer features, their detailed behaviour. Something else can bind to the protein, deciding what to do based on the shape it detects. The closer the match of shapes, the more abilities come into play. But we've always got fallback options, never failures.
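To make the binding idea a bit more concrete, here's a minimal sketch in Python. It is my own toy illustration, not anything Lanier specifies: the `Surface` class, the feature names, the ability labels and the 0.5 thresholds are all assumptions. The point is only that a poor match degrades to a fallback instead of throwing an error.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Surface:
    """Hypothetical 'shape' a module exposes (an assumption for illustration)."""
    coarse: frozenset  # crude features: roughly what the module does
    fine: frozenset    # finer features: its detailed behaviour


def overlap(wanted, offered):
    """Fraction of the wanted features that the offered surface exposes."""
    if not wanted:
        return 1.0
    return len(wanted & offered) / len(wanted)


def bind(wanted, offered):
    """Return the abilities unlocked by how closely two surfaces match.

    There is no failure case: the worst outcome is just the fallback.
    The 0.5 thresholds are arbitrary choices for this sketch.
    """
    abilities = ["fallback"]  # always available, whatever the match
    if overlap(wanted.coarse, offered.coarse) >= 0.5:
        abilities.append("basic")
        fine = overlap(wanted.fine, offered.fine)
        if fine >= 0.5:
            abilities.append("detailed")
        if fine == 1.0:
            abilities.append("full")
    return abilities


wanted = Surface(frozenset({"store", "text"}), frozenset({"utf8", "append"}))
exact = Surface(frozenset({"store", "text"}), frozenset({"utf8", "append"}))
partial = Surface(frozenset({"store"}), frozenset({"utf8"}))
alien = Surface(frozenset({"render"}), frozenset())

print(bind(wanted, exact))
print(bind(wanted, partial))
print(bind(wanted, alien))
```

An exact match unlocks everything, a partial match unlocks less, and a surface that has nothing in common still leaves the caller with the fallback.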

Pattern recognition is statistical. Interfaces can change over time to bind tighter to necessary code modules and avoid ones which result in failure modes. How about if we took the Bayesian methods used in spam filtering and used them to choose which code libraries to include, instead of directly calling objects and subroutines? You would update one module on a computer, and all scripts that relied on that module would decide whether they could take advantage of it. That's visibility for you.
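Here's roughly what I have in mind, as a hedged sketch rather than a real design. It's a tiny naive Bayes scorer of the kind spam filters use, pointed at module versions instead of emails. The `ModuleFilter` class, the feature tokens like `"v2"` and `"stream"`, and the 0.5 threshold are all hypothetical, and it only looks at the features that are present, which is a simplification.

```python
import math
from collections import Counter


class ModuleFilter:
    """Toy naive Bayes: learns which module features go with working scripts."""

    def __init__(self):
        self.counts = {"ok": Counter(), "fail": Counter()}
        self.totals = {"ok": 0, "fail": 0}

    def learn(self, features, outcome):
        """Record one observed run: outcome is 'ok' or 'fail'."""
        self.counts[outcome].update(set(features))
        self.totals[outcome] += 1

    def p_ok(self, features):
        """Estimated probability that a module with these features will work."""
        # Prior odds, with add-one smoothing so nothing is ever impossible.
        log_odds = math.log((self.totals["ok"] + 1) / (self.totals["fail"] + 1))
        for f in set(features):
            p_f_ok = (self.counts["ok"][f] + 1) / (self.totals["ok"] + 2)
            p_f_fail = (self.counts["fail"][f] + 1) / (self.totals["fail"] + 2)
            log_odds += math.log(p_f_ok / p_f_fail)
        return 1 / (1 + math.exp(-log_odds))

    def should_adopt(self, features, threshold=0.5):
        """A script's decision: take up the updated module, or stay put."""
        return self.p_ok(features) > threshold


script = ModuleFilter()
for _ in range(3):
    script.learn({"parse", "v1"}, "ok")
for _ in range(2):
    script.learn({"parse", "v2", "stream"}, "fail")

print(script.should_adopt({"parse", "v1"}))
print(script.should_adopt({"parse", "v2", "stream"}))
```

Each script keeps its own filter, so when a module is updated every dependent script makes its own call about whether to bind to the new version, based on what has and hasn't worked for it before.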

It's a completely different way of doing computing, one whose raw material we currently only refer to as bugs and crashes. It's worth a look.